Using AI to Detect Cheating in Chess

Introduction

In recent years, chess has become massively popular. Especially online, Chess.com is hosting

over ten million games every day. However, this influx of new players also brings about an influx of cheaters. For traditional

in-person tournaments it is more difficult to cheat during a game, with arbiters patrolling

the playing hall and metal detectors scanning for any devices. When playing online, it is

completely unmoderated. Anyone who can play a game of chess online has the ability to

cheat.

There are two main types of cheating. The first is naive cheating, using a computer and copying every single move it makes.

This is the same as getting a computer to play the game for you. The second is more subtle, intermittent cheating is where

you only cheat for some moves. This is harder to detect, because you might lose some games, make mistakes and play poorly, but still be a cheater.

Detecting naive cheating consistently would be great, detecting intermittent cheating consistently would be amazing.

Chess Engines

People cheat using engines, which are programs designed to play chess far better than humans can.

The main objective is therefore to recognise moves which only engines would play. An engine will take a position and evaluate

it numerically, deciding which player is winning. An evaluation of 0 means the game is equal, but a positive number means white has an advantage and a negative number

means black has an advantage. This evaluation is in terms of centipawns, so +100 means white has the same advantage as having an extra pawn. -250 means black has a relative advantage

of having 2.5 extra pawns. It doesn't strictly depend on material though, white could have less pieces but still be +300.

By extension, moves can be evaluated. The evaluation of a move is equal to the evaluation of the resulting position after playing it. Doing this for every move, we essentially rank all the possible moves. The best possible move will have an evaluation equal to the current position because you can't fabricate an advantage out of thin air, if the position is equal the best you can do is remain equal. You gain an advantage by capitalising on a mistake by your opponent.

By extension, moves can be evaluated. The evaluation of a move is equal to the evaluation of the resulting position after playing it. Doing this for every move, we essentially rank all the possible moves. The best possible move will have an evaluation equal to the current position because you can't fabricate an advantage out of thin air, if the position is equal the best you can do is remain equal. You gain an advantage by capitalising on a mistake by your opponent.

Detecting Cheating

To actually detect cheating, the obvious approach is to evaluate the best move for each position, and see if the player played the best move every time. It seems like this

should work for naive cheating at least. Unfortunately this doesn't work at all, an engine might not suggest the same move when run on a different computer. A different

engine may suggest a different best move. The player might choose to play the second-best move to throw us off.

We also can't base it off of how many near-best moves were played, because chess masters consistently play games with every move being almost as good as the best move.

There was even a game played by Paul Morphy before computers were invented, and when analysed today every move was classed as a top engine move.

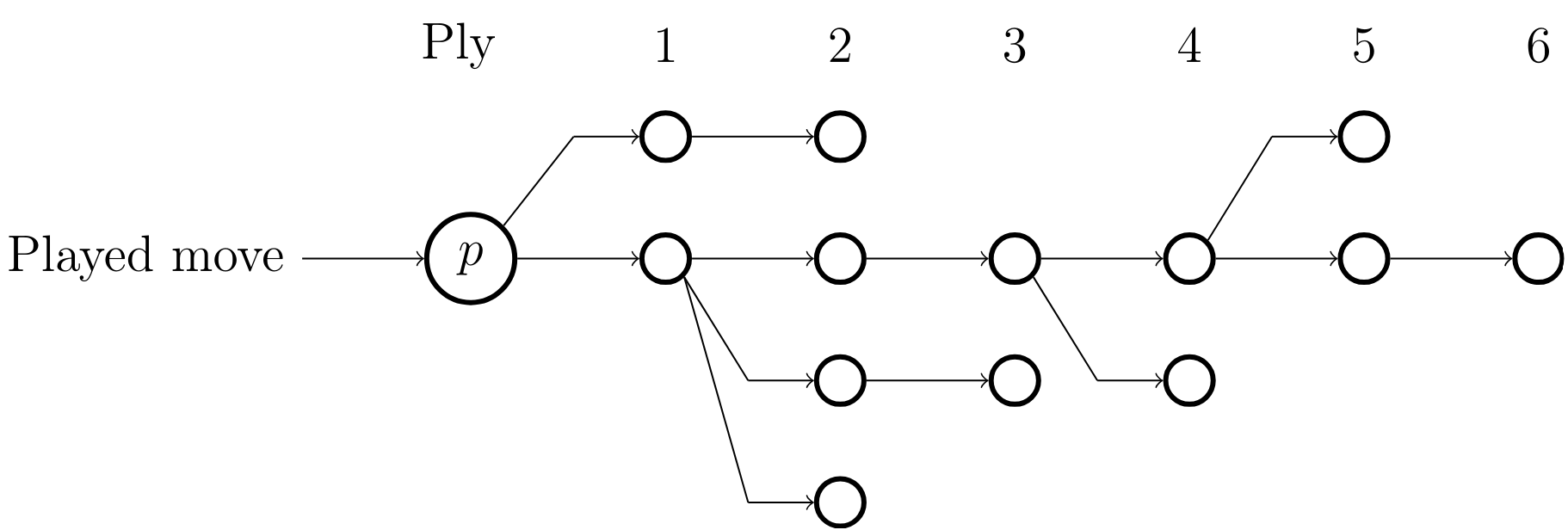

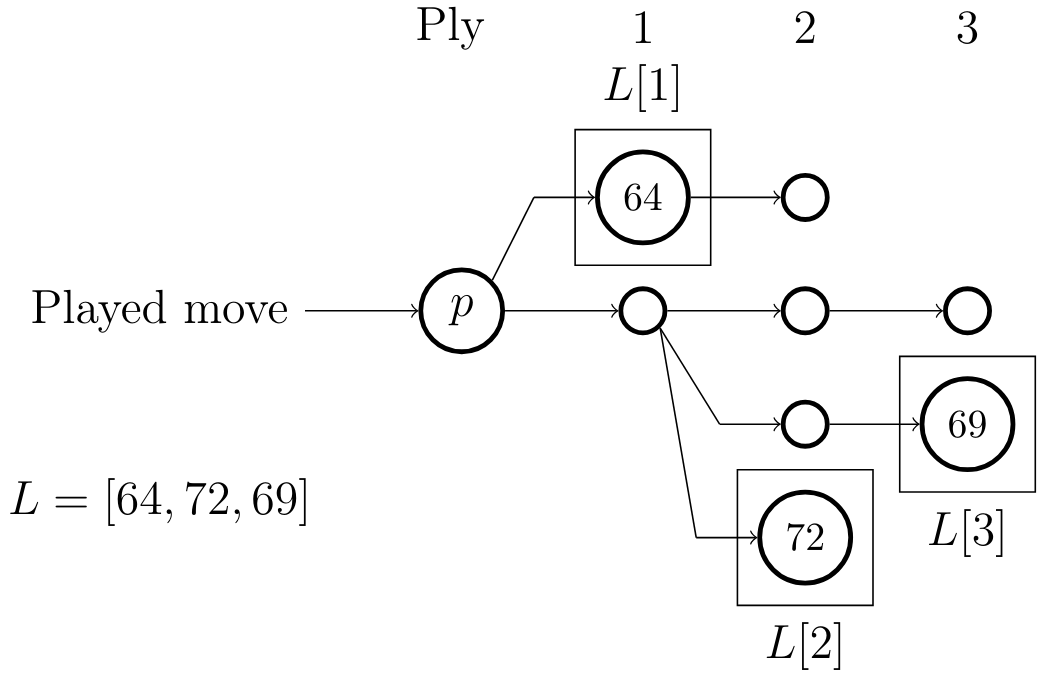

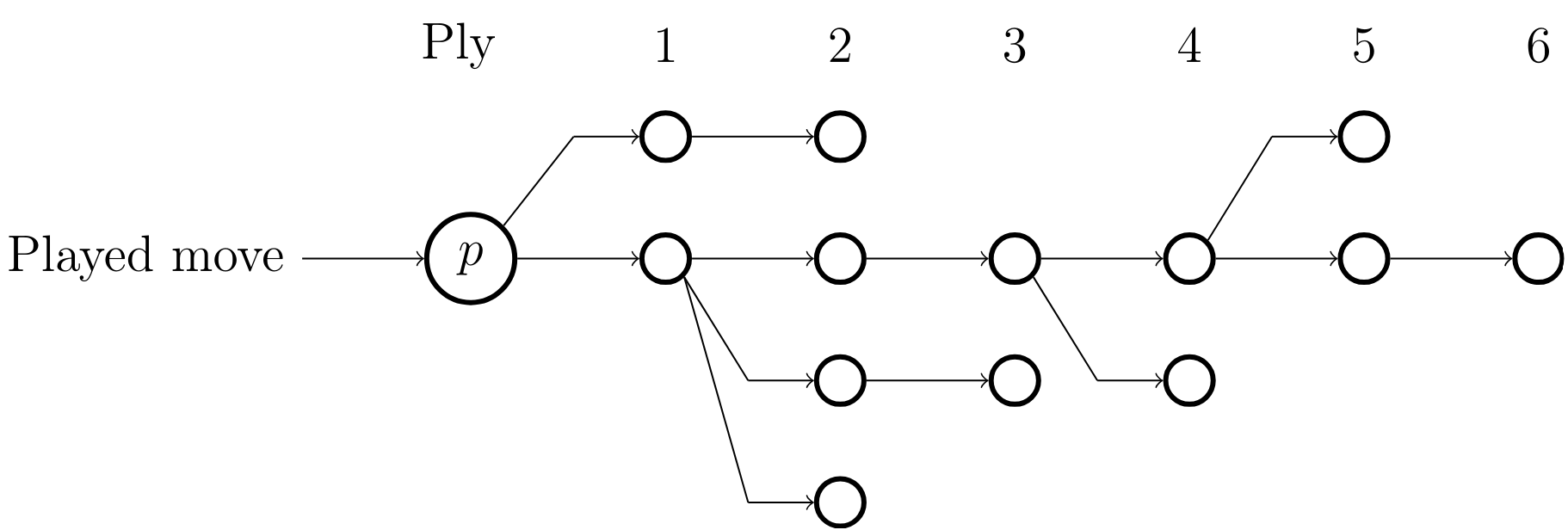

The reason for this is that an engine's evaluation is too noisy and unreliable to work with and there isn't a strict equivalence between good moves and engine moves. Instead, we can look at how the engine arrived at its final evaluation. The method for generating the evaluation is an iterative deepening search, so we are able to observe the search tree from a position p and take snapshots at different points.

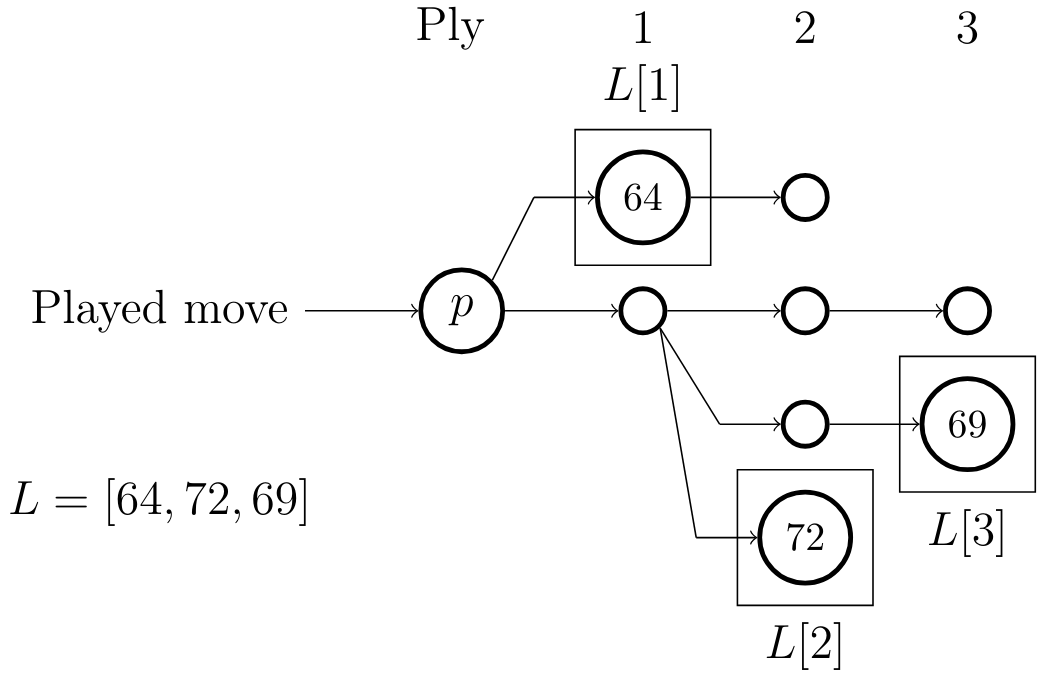

The idea here is that we are looking to see how hard it was to find the move. If the engine immediately spots it on a very low depth, it's likely obvious so it's not suspicious if a player finds it. If the move seemed like a bad one up until deep in the search tree where it suddenly became good, then it's more likely to be one that only a computer would play. At each depth (ply), we can record the best available move and its evaluation forming a vector L.

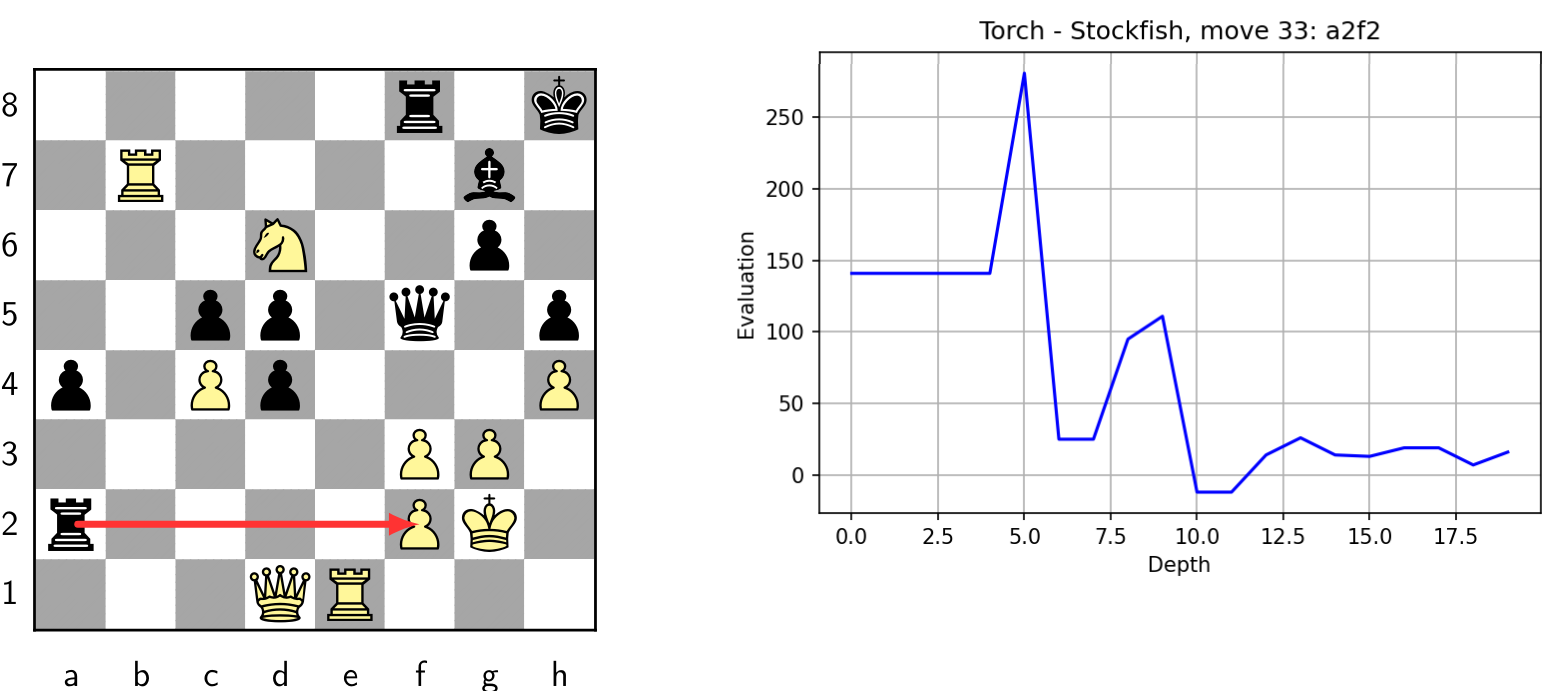

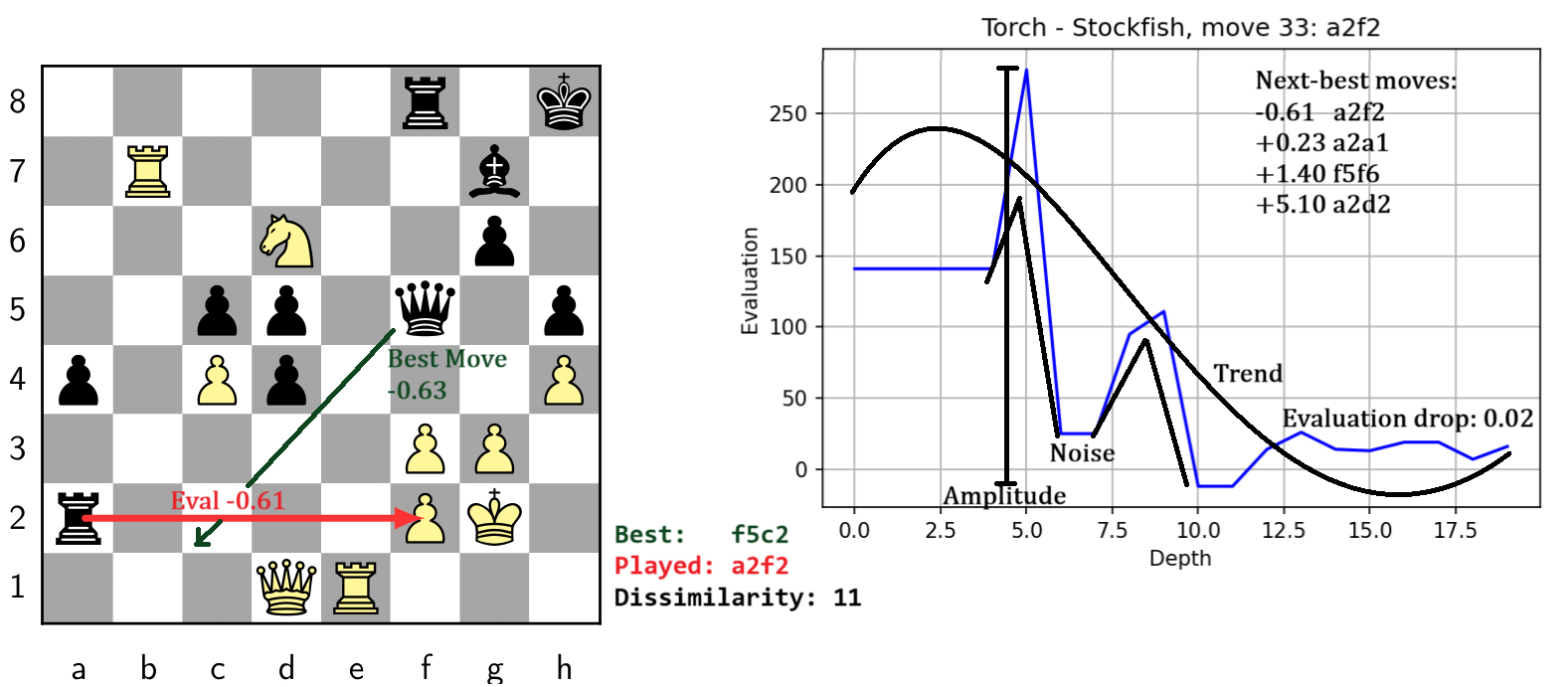

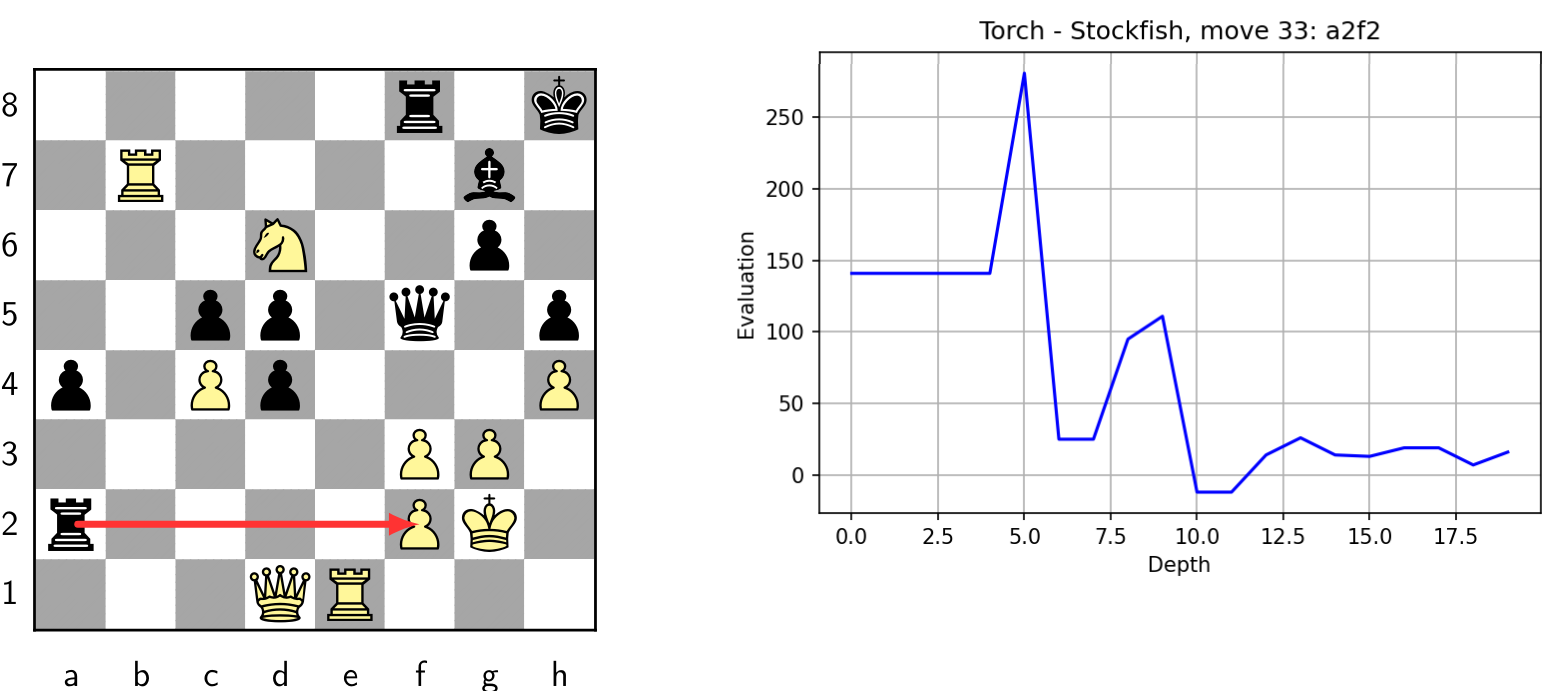

This example goes to depth 3, but we can go much deeper to depth 20 or 30 and generate a much bigger vector. This is where the machine learning component comes into play, because analysing the trend in the values gives a lot of information. Looking at a strange move in a real game between two computers (Stockfish and Torch), we see that it has a distinct trend when we plot L.

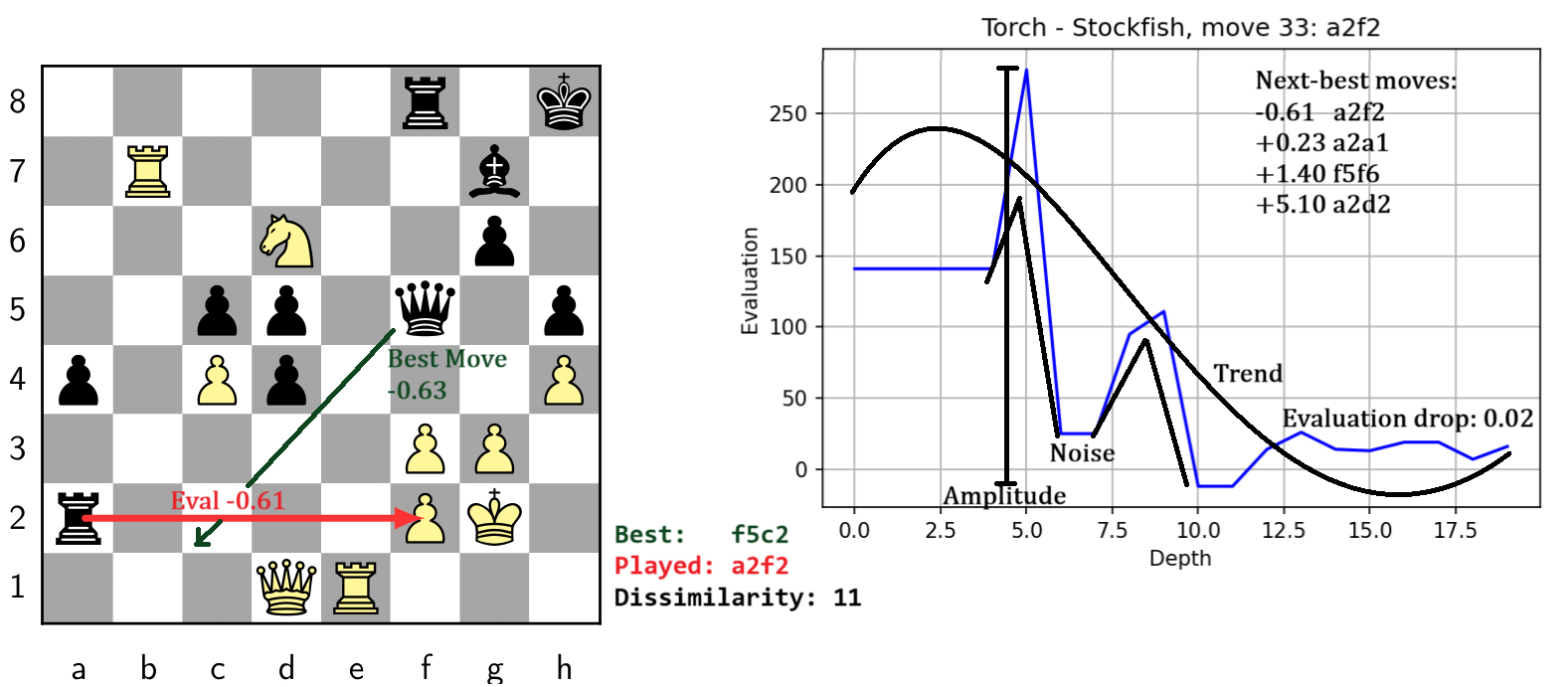

Remember positive is bad for black, so this move seemed terrible up until around depth 10, only then it was seen as good. We use support vector classification to identify the trend in the values, which is fed into a second XGBoost model using 17 metrics about the current position, such as the evaluation of the best move and what the next-best moves were and also other data about the trend itself (how noisy it is and the amplitude). We end up with a final binary classifier which can take a move as an input and classify it as either a human move or an engine move, allowing us to attempt to detect cheating on a move-by-move level (to tackle both intermittent and naive cheating).

The reason for this is that an engine's evaluation is too noisy and unreliable to work with and there isn't a strict equivalence between good moves and engine moves. Instead, we can look at how the engine arrived at its final evaluation. The method for generating the evaluation is an iterative deepening search, so we are able to observe the search tree from a position p and take snapshots at different points.

The idea here is that we are looking to see how hard it was to find the move. If the engine immediately spots it on a very low depth, it's likely obvious so it's not suspicious if a player finds it. If the move seemed like a bad one up until deep in the search tree where it suddenly became good, then it's more likely to be one that only a computer would play. At each depth (ply), we can record the best available move and its evaluation forming a vector L.

This example goes to depth 3, but we can go much deeper to depth 20 or 30 and generate a much bigger vector. This is where the machine learning component comes into play, because analysing the trend in the values gives a lot of information. Looking at a strange move in a real game between two computers (Stockfish and Torch), we see that it has a distinct trend when we plot L.

Remember positive is bad for black, so this move seemed terrible up until around depth 10, only then it was seen as good. We use support vector classification to identify the trend in the values, which is fed into a second XGBoost model using 17 metrics about the current position, such as the evaluation of the best move and what the next-best moves were and also other data about the trend itself (how noisy it is and the amplitude). We end up with a final binary classifier which can take a move as an input and classify it as either a human move or an engine move, allowing us to attempt to detect cheating on a move-by-move level (to tackle both intermittent and naive cheating).

Results

After hyperparameter tuning, the XGBoost binary classifier had an accuracy of 77.32% on the test data

with an ROC area of 0.8315. Given the nature of the problem, we can't take a single move from a single game

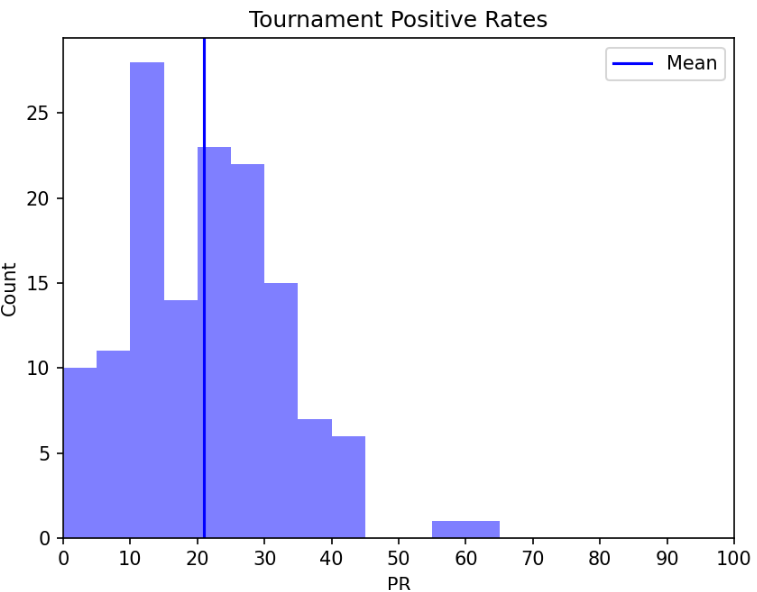

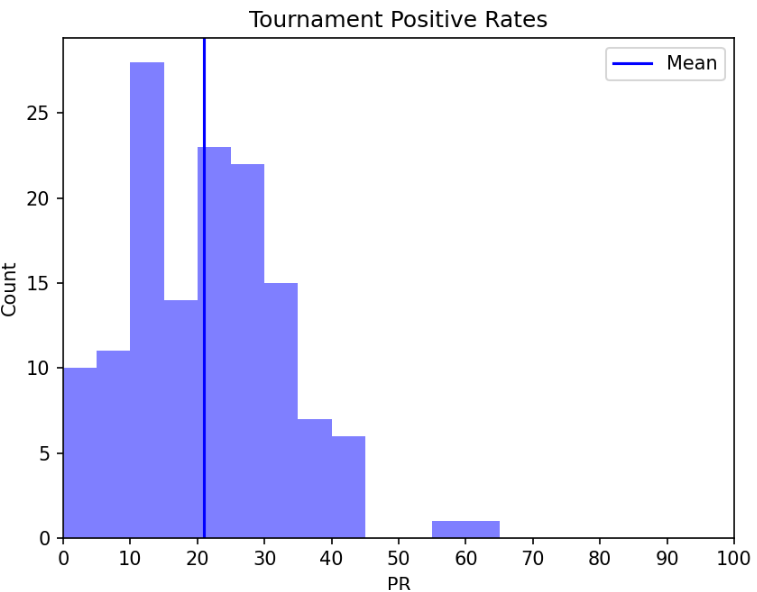

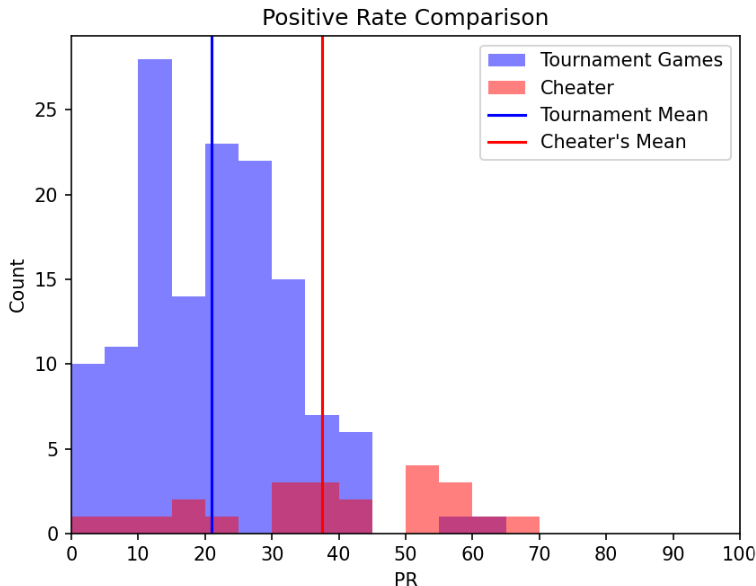

and base accusations off of it as there will be a base level of false positives. From testing games of Optiver's

UK Chess Championship, this base positive rate (PR) was estimated to be around 21%. That is to say, for a legitimate player, we

expect 21% of their moves to be incorrectly classed as engine moves.

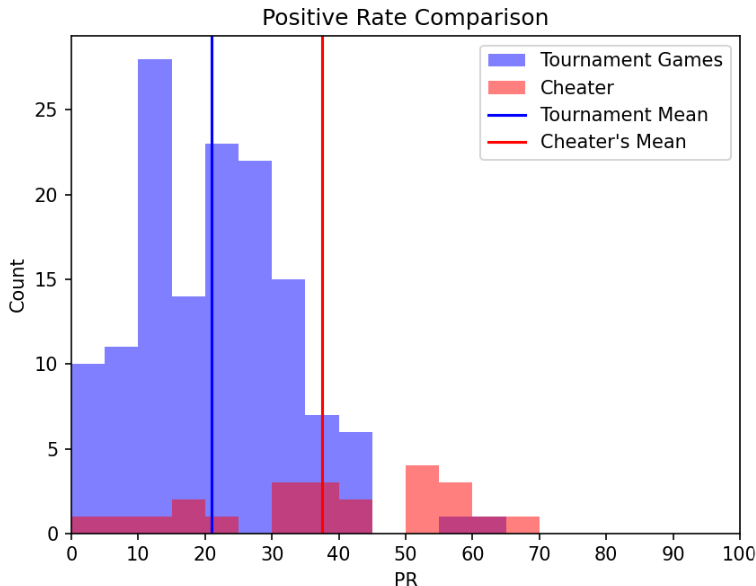

Each data point in the histogram above is the mean positive rate across all moves in a single game. We can compare this to

the histogram of positive rates of games involving a cheater who was banned from Chess.com.

Each data point in the histogram above is the mean positive rate across all moves in a single game. We can compare this to

the histogram of positive rates of games involving a cheater who was banned from Chess.com.

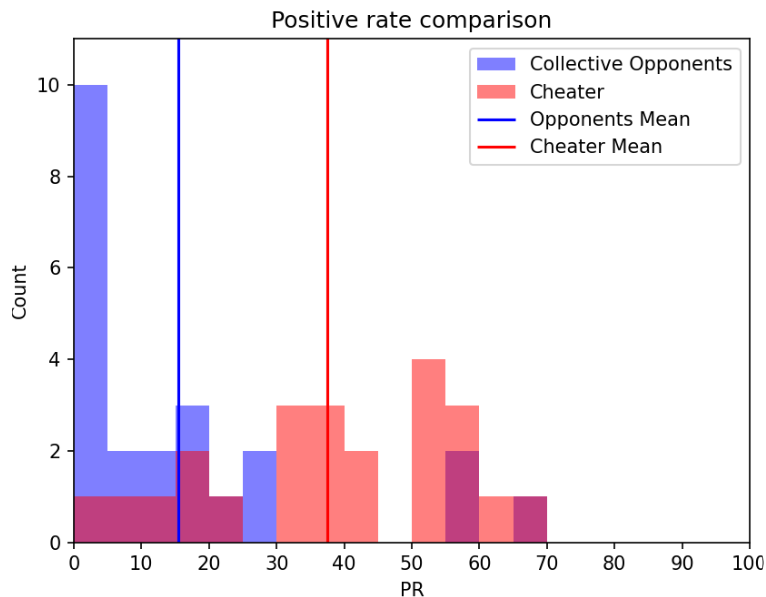

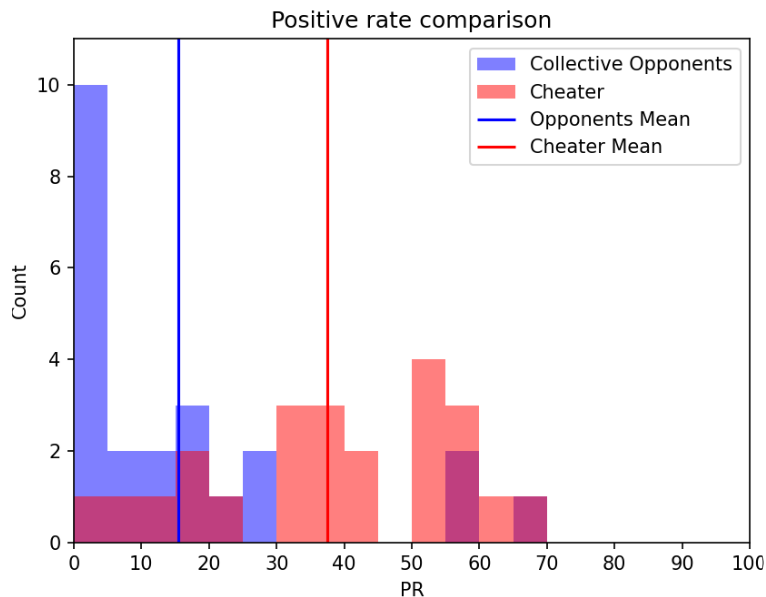

This is a clear difference, but we can go even further and compare the moves of the cheater to the moves of their

collective opponents. So now each data point is the average positive rate of the moves of one player in one game.

This is a clear difference, but we can go even further and compare the moves of the cheater to the moves of their

collective opponents. So now each data point is the average positive rate of the moves of one player in one game.

We see how the influence of an engine does in fact raise the positive rate as determined by the classifier. From

testing, this comparison appears to be effective at detecting naive cheating. While intermittent cheating is still very difficult to

recognise, the classifier does show some signs of being able to detect it too, but not as clearly as we would like it to be.

We see how the influence of an engine does in fact raise the positive rate as determined by the classifier. From

testing, this comparison appears to be effective at detecting naive cheating. While intermittent cheating is still very difficult to

recognise, the classifier does show some signs of being able to detect it too, but not as clearly as we would like it to be.